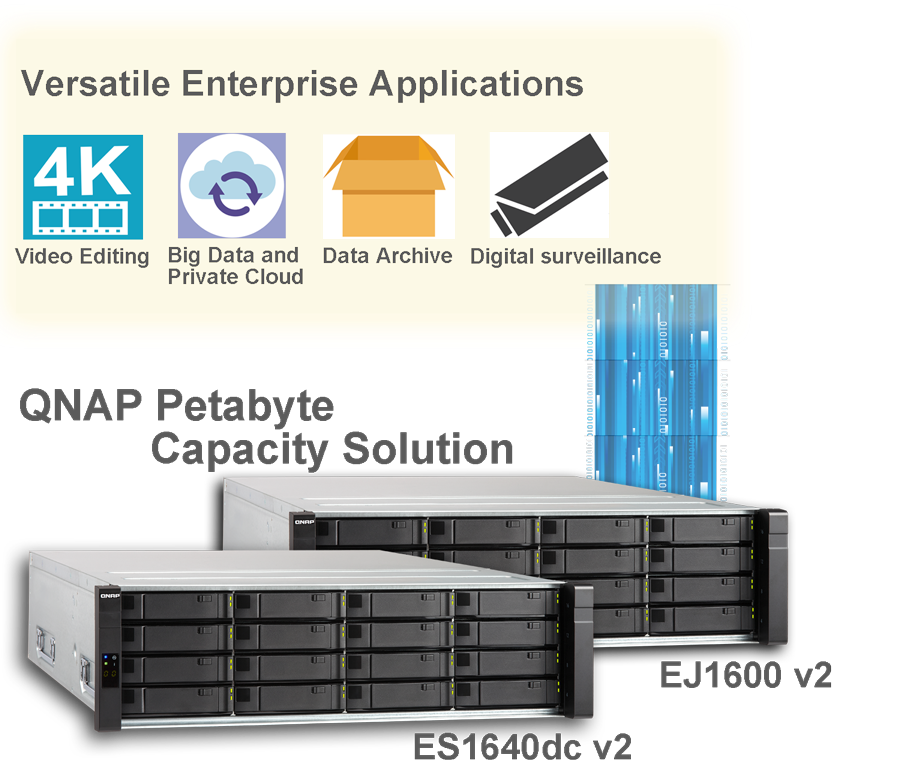

Use QNAP QES series to build a large-capacity storage system

Technical overview and application scenarios

High-availability file servers, enterprise cloud storage, online video streaming, digital surveillance storage and similar applications all require large-capacity storage devices. General-purpose NAS devices have hardware and software limitations that make them difficult to adapt to demanding, high-availability enterprise environments. Traditional file systems are also not optimized for large capacities: they impose size and capacity limits, lack integrated data integrity checks, and are missing other features.

The QNAP ES NAS offers near-continuous high availability, self-healing, data compression and deduplication, unlimited snapshots, instant disk array creation and more. Along with these essential functions, it provides a highly cost-effective large-capacity storage solution.

Example scenario

Construction of a Large Capacity Storage Pool

Prepare the disk expansion enclosure

The QNAP QES system supports storage pools that span multiple expansion enclosures. The dual-controller ES1640dc has 16 built-in drive bays and can be connected to multiple expansion enclosures, each of which also has 16 drive bays, to scale up storage capacity. With the dual-controller ES1640dc series design, up to 7 expansion enclosures can be connected, giving a single system up to 128 drive bays (16 + 7 × 16).

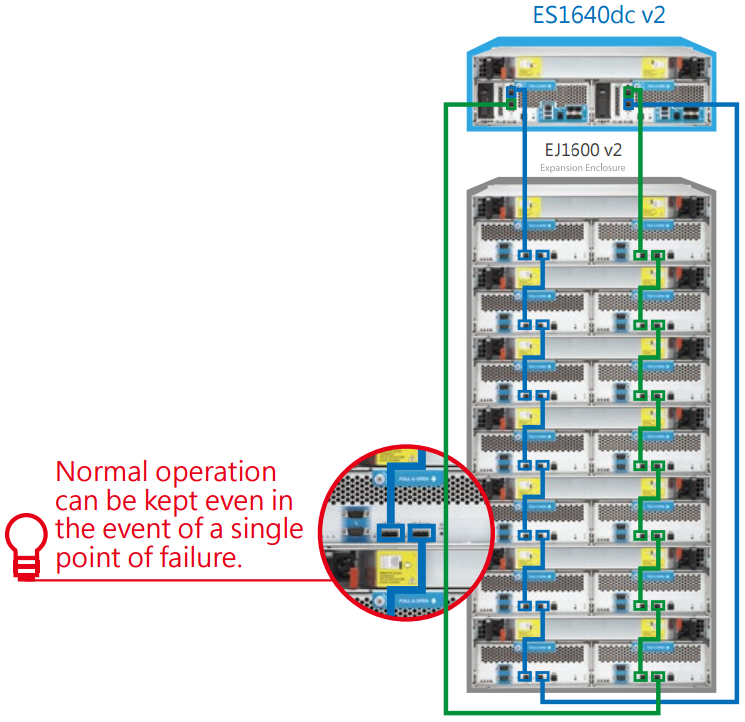

The ES1640dc v2 supports the EJ1600 v2 expansion enclosure, which connects to the ES NAS over 12Gb/s SAS and offers lower latency than USB. EJ series expansion enclosures also use a dual-controller design in which the two controllers back each other up. Each controller has two SAS expansion ports, so the ES NAS and EJ expansion enclosures can be connected in a dual-loop architecture that uses four SAS ports. The total interconnect bandwidth can reach up to 48 Gbps, enabling a high-performance, reliable, highly available environment.

Recommended connection architecture between QNAP ES NAS and EJ expansion enclosures

ES NAS and EJ series expansion enclosures both have a dual-controller architecture, and each controller has two built-in external SAS ports. To build an effective dual-loop topology, we recommend cabling each chassis as shown in the diagram above, so that the storage system can keep operating normally in the event of a single point of failure. Once the dual-loop connection is complete, each NAS controller has a fault-tolerant path to the expansion enclosure controllers on both sides. Even if any single connection is interrupted, the system can still access data through the other path.

Note: The bottom JBOD requires two longer SAS cables to connect back to the ES NAS host (loop back). For detailed hardware and cable specifications, refer to the materials list table below.
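To see why the dual loop tolerates any single cable failure, the cabling can be modeled as a small graph and checked by brute force. This is a simplified sketch, not QNAP's own logic: the node names (`NAS-A`, `EJ1-A`, ...) are hypothetical, and we assume each loop runs from one NAS controller through the same-side controller of every enclosure and returns to the other NAS controller over the loop-back cable. That assumption reproduces the 2n + 2 cable count in the materials table.

```python
from collections import defaultdict, deque


def loop_cables(side, start_ctrl, end_ctrl, n_enclosures):
    """Cables of one SAS loop: start_ctrl -> EJ1 -> ... -> EJn -> end_ctrl (the loop-back cable)."""
    nodes = [start_ctrl] + [f"EJ{i}-{side}" for i in range(1, n_enclosures + 1)] + [end_ctrl]
    return list(zip(nodes, nodes[1:]))


def dual_loop(n_enclosures):
    """Assumed cabling: the A-side loop starts at NAS-A, the B-side loop starts at NAS-B."""
    return (loop_cables("A", "NAS-A", "NAS-B", n_enclosures)
            + loop_cables("B", "NAS-B", "NAS-A", n_enclosures))


def reachable_from_nas(cables):
    """Breadth-first search from both NAS controllers over the given (undirected) cables."""
    graph = defaultdict(set)
    for a, b in cables:
        graph[a].add(b)
        graph[b].add(a)
    seen = {"NAS-A", "NAS-B"}
    queue = deque(seen)
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


def survives_any_single_cable_failure(cables, n_enclosures):
    """True if every enclosure controller stays reachable after removing any one cable."""
    modules = {f"EJ{i}-{side}" for i in range(1, n_enclosures + 1) for side in "AB"}
    for broken in cables:
        remaining = [c for c in cables if c != broken]
        if not modules <= reachable_from_nas(remaining):
            return False
    return True


if __name__ == "__main__":
    cables = dual_loop(7)
    print(len(cables))                                   # 16 (2n + 2), as in the materials table
    print(survives_any_single_cable_failure(cables, 7))  # True
    # Without the two loop-back cables, each side is a plain daisy chain:
    daisy_chain = [c for c in cables if not c[1].startswith("NAS")]
    print(survives_any_single_cable_failure(daisy_chain, 7))  # False
```

The comparison with the daisy chain shows what the loop-back cables buy: without them, a break near the top of a chain cuts off every enclosure below it.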

Installable disks and corresponding materials list

| QNAP ES NAS | QNAP EJ expansion enclosures | 0.5 m SAS short cables | Loop-back SAS long cables | Total SAS cables | Installable disks | Max. raw capacity* |
|---|---|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 0 | 16 | 160 TB |
| 1 | 1 | 2 | 2 (0.5 m) | 4 | 32 | 320 TB |
| 1 | 2 | 4 | 2 (1 m) | 6 | 48 | 480 TB |
| 1 | 3 | 6 | 2 (1 m) | 8 | 64 | 640 TB |
| 1 | 4 | 8 | 2 (1 m) | 10 | 80 | 800 TB |
| 1 | 5 | 10 | 2 (2 m) | 12 | 96 | 960 TB |
| 1 | 6 | 12 | 2 (2 m) | 14 | 112 | 1120 TB (1.12 PB) |
| 1 | 7 | 14 | 2 (2 m) | 16 | 128 | 1280 TB (1.28 PB) |

* Based on 10 TB hard drives.
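The materials table follows a regular pattern: two short cables per enclosure, two loop-back cables once any enclosure is attached, and 16 disk slots per chassis. The Python sketch below reproduces the table under that assumption; the function names are hypothetical, the loop-back lengths are simply read off the table, and `drive_tb` defaults to the 10 TB drives used for the raw-capacity column.

```python
BAYS_PER_CHASSIS = 16   # ES1640dc v2 and EJ1600 v2 both have 16 drive bays
MAX_ENCLOSURES = 7


def loopback_length_m(n_enclosures):
    """Loop-back SAS cable length, read from the materials table (None if no enclosures)."""
    if n_enclosures == 0:
        return None
    if n_enclosures == 1:
        return 0.5
    if n_enclosures <= 4:
        return 1.0
    return 2.0


def materials(n_enclosures, drive_tb=10):
    """Cables, disk slots and raw capacity for 1 ES NAS plus n EJ enclosures."""
    if not 0 <= n_enclosures <= MAX_ENCLOSURES:
        raise ValueError("the ES1640dc supports 0 to 7 expansion enclosures")
    short_cables = 2 * n_enclosures              # one incoming cable per controller side per enclosure
    loopback_cables = 2 if n_enclosures else 0   # one loop-back cable per loop
    disks = BAYS_PER_CHASSIS * (1 + n_enclosures)
    return {
        "short_cables": short_cables,
        "loopback_cables": loopback_cables,
        "loopback_length_m": loopback_length_m(n_enclosures),
        "total_cables": short_cables + loopback_cables,
        "disks": disks,
        "raw_tb": disks * drive_tb,
    }


for n in range(MAX_ENCLOSURES + 1):
    print(n, materials(n))
```

Passing a different `drive_tb` gives the raw capacity for other drive sizes; usable capacity after RAID still needs the capacity calculation tool.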

To estimate the available capacity with specific RAID types, use the ES NAS ZFS Capacity Calculation Tool:

(https://enterprise-nas.qnap.com/en/calculator/)

Need SSD?

Dual-controller ES NAS systems support using SSDs as a read cache. When planning to use SSDs, consider their smaller capacity, higher cost, and the drive bays they occupy. SSDs offer low latency and far higher IOPS than hard drives, which makes them useful for workloads such as databases and virtual desktops. If the ES NAS will mainly serve as a file server, capacity and read/write bandwidth should take priority, which makes hard drives a better fit than SSDs.

Planning the RAID architecture and hot spares

As storage systems store more data, the chance of data loss through hardware failure also increases. Reliability and backup plans thus become exceedingly important. Before building the overall system, we can plan RAID architectures according to the importance of data, capacity utilization, and tolerance level for data loss.

Because QES is built on ZFS, it places strong emphasis on data protection, with features including Global Hot Spare, RAID TP (which tolerates the simultaneous failure of up to three drives), Triple-Mirror mode (which keeps three copies of the data), and more. In environments that require multiple redundant copies, QES lets you trade performance and capacity utilization for maximum reliability.
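The trade-off between redundancy and capacity utilization can be illustrated with simple arithmetic. The sketch below compares a 15-disk group in RAID 6, RAID TP and a triple mirror. It is a simplified model: it assumes triple mirroring is built from 3-way mirror sets, and it ignores ZFS metadata and padding overhead as well as any performance effects, so use the ES NAS ZFS Capacity Calculation Tool for real figures.

```python
from dataclasses import dataclass


@dataclass
class Layout:
    name: str
    disks: int
    tolerated_failures: int   # simultaneous drive failures survived in the worst case
    data_disks: float         # disks' worth of space left for unique data

    @property
    def efficiency(self):
        return self.data_disks / self.disks


def raid6(n):
    return Layout("RAID 6", n, 2, n - 2)


def raid_tp(n):
    return Layout("RAID TP", n, 3, n - 3)


def triple_mirror(n):
    # Assumption: disks are arranged in 3-way mirror sets, so every block is stored three times.
    if n % 3:
        raise ValueError("triple mirroring needs a multiple of 3 disks")
    return Layout("Triple-Mirror", n, 2, n / 3)


for layout in (raid6(15), raid_tp(15), triple_mirror(15)):
    print(f"{layout.name:14s} survives {layout.tolerated_failures} failures, "
          f"{layout.efficiency:.0%} of raw capacity usable")
```

With 15 disks this gives roughly 87%, 80% and 33% usable space respectively, which is the capacity cost of each extra level of protection.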

For general use, we recommend a ZFS RAID group architecture: treat each NAS or expansion enclosure as a unit, build a RAID 6 group from 10 to 15 of its disks, and leave one blank disk per enclosure for the global hot spare mechanism. This provides both strong data protection and fault tolerance. The recommended configuration is shown in the table and figures below.

128 disk RAID configuration example

| Location | Installed disks | RAID type | Hot spares |
|---|---|---|---|
| ES NAS | 16 | 11-disk RAID 6 (the 4 SSD system cache disks are not included in the storage pool) | 1 |
| EJ JBOD#1 | 16 | 15-disk RAID 6 | 1 |
| EJ JBOD#2 | 16 | 15-disk RAID 6 | 1 |
| EJ JBOD#3 | 16 | 15-disk RAID 6 | 1 |
| EJ JBOD#4 | 16 | 15-disk RAID 6 | 1 |
| EJ JBOD#5 | 16 | 15-disk RAID 6 | 1 |
| EJ JBOD#6 | 16 | 15-disk RAID 6 | 1 |
| EJ JBOD#7 | 16 | 15-disk RAID 6 | 1 |
| Total | 128 | 8 RAID 6 groups forming a RAID 60 storage pool | 8 |

When creating RAID groups, we recommend leaving one blank hard disk outside any array. QES automatically treats these reserved disks as hot spares; no additional setup is required. If a hard disk fails, the global hot spare mechanism is triggered: the system automatically claims a blank disk from the ES NAS or any JBOD and rebuilds the RAID group.
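The global hot spare behavior can be illustrated with a toy model in which blank disks form one shared pool that every RAID group can draw from. The class name, disk labels and slot numbering are hypothetical, and the rule of taking the first available blank disk is an assumption; the document does not specify how QES chooses among several spares.

```python
class GlobalHotSpares:
    """Toy model: any blank disk in any chassis can replace a failed disk in any RAID group."""

    def __init__(self, groups, blank_disks):
        self.groups = {name: list(members) for name, members in groups.items()}
        self.blanks = list(blank_disks)   # unassigned disks on the ES NAS or any JBOD
        self.failed = []

    def disk_failed(self, group, disk):
        """Remove the failed disk and return the spare that replaces it (None if none left)."""
        self.groups[group].remove(disk)
        self.failed.append(disk)
        if not self.blanks:
            return None                   # the group stays degraded until a disk is added
        spare = self.blanks.pop(0)        # assumption: the first available blank disk is used
        self.groups[group].append(spare)  # the RAID rebuild onto this disk would start here
        return spare


groups = {
    "ES NAS": [f"NAS-{slot}" for slot in range(5, 16)],      # hypothetical: slots 1-4 hold the SSD cache
    "EJ JBOD#1": [f"JBOD1-{slot}" for slot in range(1, 16)],
}
pool = GlobalHotSpares(groups, blank_disks=["NAS-16", "JBOD1-16"])
print(pool.disk_failed("EJ JBOD#1", "JBOD1-3"))   # NAS-16: a spare from another chassis
print(pool.disk_failed("EJ JBOD#1", "JBOD1-7"))   # JBOD1-16
print(pool.disk_failed("ES NAS", "NAS-5"))        # None: no blank disks left
```

The second failure in JBOD#1 shows the point of a global pool: a group is not limited to the spare in its own chassis.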

NAS drive configuration:

JBOD #1 drive configuration:

JBOD #7 drive configuration:

From top to bottom, the figures show the recommended drive configurations for the ES NAS and the EJ JBODs. If the ES NAS is configured with four SSDs as a system cache, its RAID 6 array consists of 11 disks plus 1 reserved hot spare. If no SSD cache is deployed, 15 disks can be used to build the RAID 6 array. Each of the remaining expansion enclosures uses a 15-disk RAID 6 array with 1 hot spare.
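The recommended 128-disk plan can be tallied in a few lines to confirm that the slot, spare and RAID group counts add up. In this sketch the `PLAN` list mirrors the configuration table, RAID 6 is assumed to consume two disks' worth of parity per group, and the resulting data capacity is a raw upper bound before ZFS overhead, computed with 10 TB drives.

```python
RAID6_PARITY_DISKS = 2

# (location, disks in the RAID 6 group, hot spares, SSD cache disks), per the configuration table
PLAN = [("ES NAS", 11, 1, 4)] + [(f"EJ JBOD#{i}", 15, 1, 0) for i in range(1, 8)]


def summarize(plan, drive_tb):
    """Count slots, spares and data capacity for a list of per-chassis RAID 6 groups."""
    return {
        "raid6_groups": len(plan),
        "slots_used": sum(group + spares + ssd for _, group, spares, ssd in plan),
        "raid_disks": sum(group for _, group, _, _ in plan),
        "hot_spares": sum(spares for _, _, spares, _ in plan),
        # Upper bound: ignores ZFS metadata, padding and reserved space
        "data_tb": sum(group - RAID6_PARITY_DISKS for _, group, _, _ in plan) * drive_tb,
    }


print(summarize(PLAN, drive_tb=10))
# {'raid6_groups': 8, 'slots_used': 128, 'raid_disks': 116, 'hot_spares': 8, 'data_tb': 1000}
```

The 116 RAID disks and 8 hot spares match the storage pool used in the test platform; the ES NAS ZFS Capacity Calculation Tool gives the usable figure after ZFS overhead.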

How to build RAID 60

Using the architecture above, after creating the first RAID group, add more RAID groups through storage pool expansion (Expand Pool). Because the RAID groups are striped together, data is written to and read from several RAID groups at the same time. This creates a RAID 60 storage pool in QES that delivers both performance and reliability.
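Striping across RAID groups is what turns several RAID 6 groups into a RAID 60 pool. The sketch below uses a simplified round-robin model with a hypothetical fixed chunk size to show that each group receives an equal share of a write and that reads visit the groups in the same order. Real ZFS does not use a fixed rotation: it stripes dynamically across top-level vdevs and favors those with more free space.

```python
def stripe_write(data, n_groups, chunk_size):
    """Spread consecutive chunks of a write round-robin across RAID groups (simplified)."""
    per_group = [[] for _ in range(n_groups)]
    for k, start in enumerate(range(0, len(data), chunk_size)):
        per_group[k % n_groups].append(data[start:start + chunk_size])
    return per_group


def stripe_read(per_group):
    """Reassemble the data by visiting the groups in the same round-robin order."""
    n_groups = len(per_group)
    total_chunks = sum(len(chunks) for chunks in per_group)
    return b"".join(per_group[k % n_groups][k // n_groups] for k in range(total_chunks))


data = bytes(range(256)) * 64                      # 16 KiB of sample data
groups = stripe_write(data, n_groups=8, chunk_size=128)
print([len(chunks) for chunks in groups])          # 16 chunks on each of the 8 groups
assert stripe_read(groups) == data
```

Because every group holds a slice of each large write, all eight groups work in parallel, while RAID 6 inside each group still protects against two disk failures per group.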

Basic application and performance data

In this section, we use the architecture above to create a single storage pool with hundreds of terabytes of capacity and, as an example, expose it through a shared folder for the corresponding application scenarios. The tests simulate basic application scenarios and record their performance data.

Test platform

| Storage system | Configuration |
|---|---|
| NAS | 1 x QNAP ES1640dc v2 |
| Expansion enclosures | 7 x QNAP EJ1600 v2 |
| Interconnection method | 4 x Mini-SAS 12Gb/s, Dual Loop |
| SSD model | 4 x Samsung PM863 240GB |
| HDD model 1 | 12 x HGST HUH721010AL5200 10TB |
| HDD model 2 | 112 x Seagate ST8000NM0075 8TB |
| Total number of drives | 4 x SSD + 124 x HDD |
| NAS data port network card | Intel X520-SR2 SFP+ |
| SFP+ connection method | Optical fiber |
| SFP+ GBIC | Intel FTLX8571D3BCV |
| System version | QES 1.1.3 |
| Storage pool structure | 8 RAID 6 groups (116 HDDs) plus 8 hot spares, 124 HDDs in total |

Ten Windows Server 2012 R2 hosts mount the same shared folder and run I/O tests concurrently to measure the throughput the ES NAS delivers when multiple hosts read and write at the same time.

Read/write performance at low capacity utilization

The measured data below show that SSD caching significantly improves random block read performance.

Single client test

| Test item | Load mode | No SSD caching | With SSD caching |
|---|---|---|---|
| AJA System Test sequential read | HD-1080i, 16GB, 10bit YUV | 603 MB/s | 410 MB/s |
| Iometer 256K sequential read | Thread=1, Queue depth=1 | 304.36 MB/s | 236.71 MB/s |
| Iometer 4K random read | Thread=1, Queue depth=1 | 48.8 IOPS | 108.29 IOPS |
| Iometer 4K random read | Thread=1, Queue depth=16 | 974.5 IOPS | 4434 IOPS |
| AJA System Test sequential write | HD-1080i, 16GB, 10bit YUV | 520 MB/s | 500 MB/s |
| Iometer 256K sequential write | Thread=1, Queue depth=1 | 233.18 MB/s | 295.78 MB/s |
| Iometer 4K random write | Thread=1, Queue depth=1 | 1474 IOPS | 2959.64 IOPS |
| Iometer 4K random write | Thread=1, Queue depth=16 | 2281 IOPS | 2801.89 IOPS |

Multi-client concurrent access test

| Test item | Load mode | No SSD caching | With SSD caching |
|---|---|---|---|
| AJA System Test sequential read | HD-1080i, 16GB, 10bit YUV | N/A (see note) | N/A (see note) |
| Iometer 256K sequential read | Thread=1, Queue depth=1 | 1017.34 MB/s | 1017.17 MB/s |
| Iometer 4K random read | Thread=1, Queue depth=1 | 476.1 IOPS | 7027.98 IOPS |
| Iometer 4K random read | Thread=1, Queue depth=16 | 974.76 IOPS | 18821.64 IOPS |
| AJA System Test sequential write | HD-1080i, 16GB, 10bit YUV | N/A (see note) | N/A (see note) |
| Iometer 256K sequential write | Thread=1, Queue depth=1 | 796.38 MB/s | 659.79 MB/s |
| Iometer 4K random write | Thread=1, Queue depth=1 | 2628.61 IOPS | 3200.6 IOPS |
| Iometer 4K random write | Thread=1, Queue depth=16 | 2602.41 IOPS | 2863.7 IOPS |

Note: AJA System Test does not support multi-client concurrent access testing, so no data is available.
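The size of the SSD cache effect is easier to see as ratios. This sketch divides each cached result by the uncached one, using the multi-client numbers from the table above: 4K random reads improve by roughly 15x at queue depth 1 and 19x at queue depth 16, while sequential throughput stays flat or drops. The helper name is hypothetical.

```python
# (test item, without SSD cache, with SSD cache), copied from the multi-client table
MULTI_CLIENT = [
    ("Iometer 256K sequential read, QD1 (MB/s)", 1017.34, 1017.17),
    ("Iometer 4K random read, QD1 (IOPS)", 476.1, 7027.98),
    ("Iometer 4K random read, QD16 (IOPS)", 974.76, 18821.64),
    ("Iometer 256K sequential write, QD1 (MB/s)", 796.38, 659.79),
    ("Iometer 4K random write, QD1 (IOPS)", 2628.61, 3200.6),
    ("Iometer 4K random write, QD16 (IOPS)", 2602.41, 2863.7),
]


def cache_speedups(rows):
    """Ratio of the cached result to the uncached result for each test item."""
    return {name: cached / uncached for name, uncached, cached in rows}


for name, ratio in cache_speedups(MULTI_CLIENT).items():
    print(f"{name:44s} {ratio:6.2f}x")
```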

Writing a large number of files

To simulate workloads that involve a large number of files, the test in this section uses Disk tools (File Generator) to control file size, quantity and content (duplicate or random text), and measures performance when writing a large number of files.

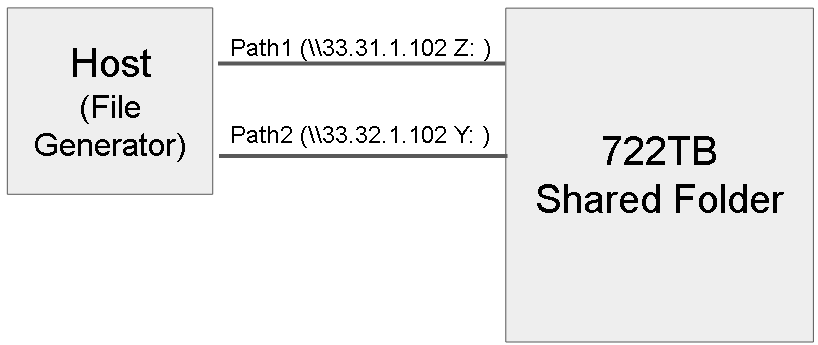

Network connection method

In terms of network topology, two shared folders on the ES NAS are mounted on the server host via two 10Gb network ports.

Test load source

| Path 1 (\\33.31.1.102) (Z:\) | Path 2 (\\33.31.1.102) (Z:\1) | Path 3 (\\33.32.1.102) (Y:\2) | | |
|---|---|---|---|---|
| Write load 1: single file size 30 MB | Write load 3: single file size 40 MB | Write load 5: single file size 20 MB | Write load 7: single file size 60 MB | Write load 9: single file size 50 MB |
| Write load 2: single file size 30 MB | Write load 4: single file size 50 MB | Write load 6: single file size 50 MB | Write load 8: single file size 80 MB | Write load 10: single file size 100 MB |

To test a high-intensity write load, the generated files range from 20 to 100 MB and contain completely random data. The files were written to the storage system continuously over a long period, with the following results.
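For readers who want to reproduce a similar workload, a stand-in for the file generator can be sketched in a few lines of Python. This is not the Disk tools (File Generator) utility used in the test; the function name, file naming scheme and the decimal interpretation of "MB" are assumptions. Filling files with `os.urandom` data matters here because random content cannot be compressed or deduplicated, so every byte reaches the disks.

```python
import os
import tempfile
from pathlib import Path

MB = 1000 * 1000   # assumption: the document's "MB" read as decimal megabytes


def generate_files(target_dir, file_size, count, block_size=MB):
    """Write `count` files of `file_size` bytes of random (incompressible) data; return their paths."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    for i in range(count):
        with open(target / f"file_{i:06d}.bin", "wb") as f:
            remaining = file_size
            while remaining > 0:
                chunk = min(block_size, remaining)
                f.write(os.urandom(chunk))   # random bytes defeat compression and deduplication
                remaining -= chunk
    return sorted(target.glob("file_*.bin"))


if __name__ == "__main__":
    # Dry run in a temporary directory. On the test hosts the target would instead be a
    # folder on one of the mapped shares listed above (hypothetical layout).
    with tempfile.TemporaryDirectory() as tmp:
        files = generate_files(tmp, file_size=2 * MB, count=3)
        print([f.stat().st_size for f in files])   # [2000000, 2000000, 2000000]
```

Running one such generator per write load, each against its own path and file size, approximates the ten parallel loads in the table.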

Write test result

- Total data written: 722 TB
- Total write time: 713 hours
- Average write speed: 294.75 MB/s (about 1 TB per hour)
- NAS CPU usage: 50 to 55%
- Host CPU usage: 80 to 90%
- Actual low-level write bandwidth of the ZFS file system: 850 to 1000 MB/s, at about 9K to 11K IOPS with an average I/O size of 94 KB
- ZFS low-level write amplification: about 3x (low-level write bandwidth relative to the average write speed)
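The write-test figures can be cross-checked with a little arithmetic. This sketch assumes the totals are in binary units, under which 722 TB over 713 hours works out to roughly 295 MB/s, consistent with the reported average. It also shows that 850 to 1000 MB/s of low-level bandwidth against that average gives the roughly 3x write amplification, and that 9K to 11K IOPS at 94 KB per I/O lands in about the same bandwidth range. The function name is hypothetical.

```python
TOTAL_WRITTEN_TB = 722
TOTAL_HOURS = 713
REPORTED_AVG_MB_S = 294.75


def write_test_summary():
    seconds = TOTAL_HOURS * 3600
    # Assumption: totals use binary units (1 TB = 2**40 bytes, 1 MB = 2**20 bytes)
    avg_mb_s = TOTAL_WRITTEN_TB * 2**40 / seconds / 2**20
    return {
        "avg_mb_s": avg_mb_s,
        "tb_per_hour": TOTAL_WRITTEN_TB / TOTAL_HOURS,
        # Low-level ZFS bandwidth (850 to 1000 MB/s) relative to the client-side average
        "write_amplification": (850 / REPORTED_AVG_MB_S, 1000 / REPORTED_AVG_MB_S),
        # IOPS x average I/O size (94 KB), in MB/s, should roughly match the low-level bandwidth
        "iops_x_io_size_mb_s": (9_000 * 94 / 1000, 11_000 * 94 / 1000),
    }


for key, value in write_test_summary().items():
    print(key, value)
```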

High capacity level performance

After a long period of intensive writing, both the used capacity and the number of files become very large. We then measured the storage system's read performance in this state to assess its stability under sustained heavy use, with the following results.

Read Performance measurement

| Test item | Load mode | Result |
|---|---|---|
| Iometer 256K sequential read | Thread=1, Queue depth=1 | 421.40 MB/s |
| Iometer 256K sequential read | Thread=1, Queue depth=2 | 719.17 MB/s |
| Iometer 256K sequential read | Thread=1, Queue depth=4 | 751.45 MB/s |
| Iometer 256K sequential read | Thread=4, Queue depth=4 | 1084.06 MB/s |
| Iometer 4K random read | Thread=1, Queue depth=1 | 2240.47 IOPS |
| Iometer 4K random read | Thread=1, Queue depth=4 | 8225.18 IOPS |
| Iometer 4K random read | Thread=4, Queue depth=4 | 14141.09 IOPS |

In these measurements, even with the storage pool nearly full, the NAS maintained consistent read performance. The highest sequential read result (1084.06 MB/s) came close to the upper limit of a 10Gb network link, indicating that the system can deliver stable performance over the long term.
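To put the peak sequential read figure in context, the sketch below expresses each result as a fraction of the raw 10 Gb/s line rate (1250 MB/s). It assumes the reported MB/s values are decimal megabytes and ignores Ethernet, TCP and SMB protocol overhead, so the achievable ceiling is somewhat lower than 1250 MB/s.

```python
LINK_GBPS = 10
LINE_RATE_MB_S = LINK_GBPS * 1000 / 8   # 1250 MB/s, before Ethernet/TCP/SMB overhead

# Iometer 256K sequential read results at high capacity utilization, from the table above
SEQ_READ_MB_S = {
    "Thread=1, QD=1": 421.40,
    "Thread=1, QD=2": 719.17,
    "Thread=1, QD=4": 751.45,
    "Thread=4, QD=4": 1084.06,
}

for mode, mb_s in SEQ_READ_MB_S.items():
    print(f"{mode}: {mb_s / LINE_RATE_MB_S:.0%} of the 10GbE line rate")
```

The four-thread result reaches about 87% of the raw line rate, which supports the observation that the nearly full pool is not the bottleneck at that load.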

Disaster recovery and array reconstruction

In a large-capacity storage system, the storage pool depends on many hard disks, and dozens or even hundreds of disks may be running at the same time, so handling disk failures becomes an important issue. As mentioned above, QES provides multi-level data protection through hardware and software. If a hard disk fails, two mechanisms help ensure data reliability: global hot spare and fast data recovery.

Disk failure handling process

Taking the test architecture above as an example, the storage pool consists of 8 RAID groups built from a total of 116 hard disks, with another 8 hard disks set aside as hot spares. With 722 TB in use and the pool 99.97% full, if a hard disk fails, QES immediately claims a standby disk through the global hot spare mechanism and uses fast data recovery to rebuild the data, minimizing the impact of the failure.

Array reconstruction measurement

In a nearly full storage pool, if an 8 TB hard disk fails, the system rebuilds its data onto another 8 TB hot spare. The following test data shows the approximate time required for data recovery.

| Storage pool capacity level | Used space | 8 TB HDD failure recovery time |
|---|---|---|
| 99.97% | 722.0 TB / 722.2 TB | 276 h 23 min (about 11.5 days) |
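The rebuild time translates into an average rebuild rate as follows. This sketch assumes the full 8 TB (decimal) of the failed disk had to be reconstructed, which is reasonable for a pool that is 99.97% full, and it gives only an average over the whole rebuild, not the instantaneous rate.

```python
from datetime import timedelta

FAILED_DISK_BYTES = 8 * 10**12          # assumption: 8 TB taken as 8 x 10**12 bytes
rebuild_time = timedelta(hours=276, minutes=23)

seconds = rebuild_time.total_seconds()
days = seconds / 86_400
avg_rebuild_mb_s = FAILED_DISK_BYTES / seconds / 10**6

print(f"{days:.1f} days, about {avg_rebuild_mb_s:.1f} MB/s on average")
# 11.5 days, about 8.0 MB/s on average
```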

Expansion enclosure failure handling

If an expansion enclosure fails, QES protects the integrity of existing data by applying an offline data protection mechanism to the storage pool. Once the expansion enclosure is back online and the storage pool is restored, the pool resumes normal service.

Conclusion

QNAP QES offers many features, including multi-level data protection, very large single storage pools, real-time data compression and data deduplication, all of which are valuable for large-capacity application environments. Combined with a variety of RAID and storage pool configurations, it helps users plan their storage flexibly. Even in the event of accidents or disasters, its fast rebuild technology minimizes the impact of hardware failure during recovery. With multiple 10Gb network ports and support for iSCSI, NFS, CIFS and other protocols, it is well suited to a wide range of application scenarios.